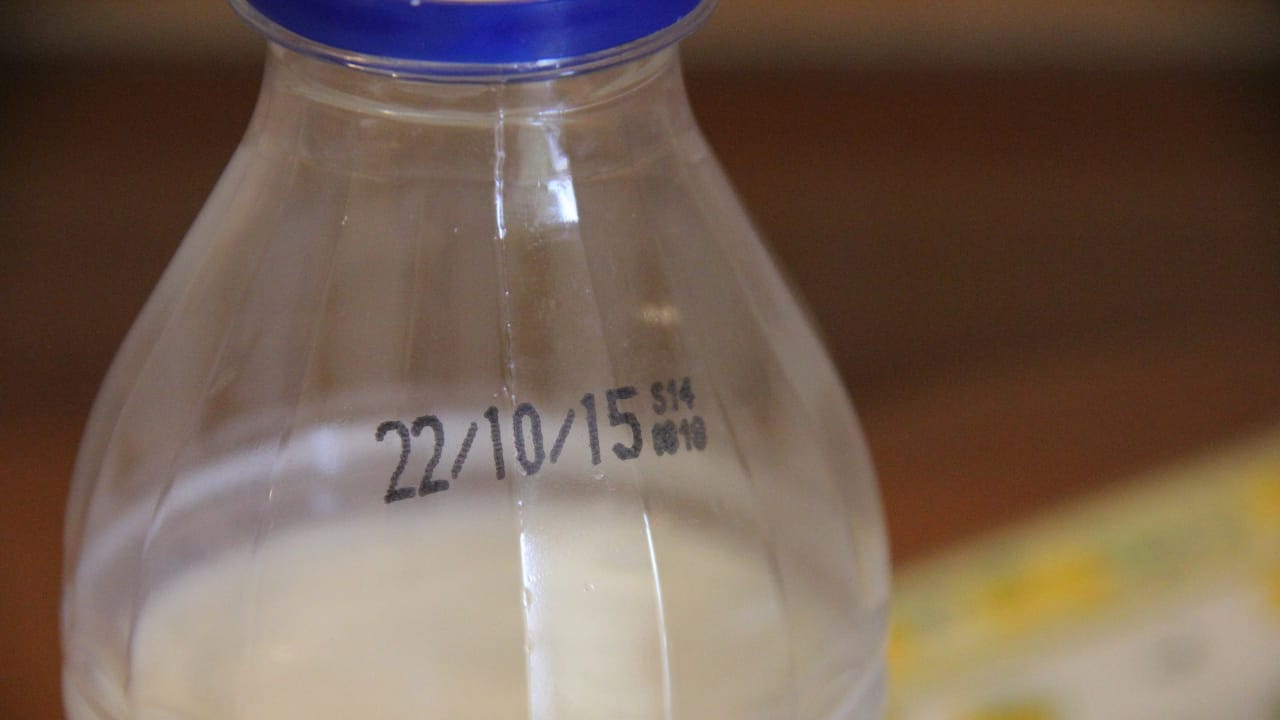

Does statistics, or in this case data science, expire quickly like pasteurized milk, or does it keep for a long time like long-life milk? Or does it have the problem of electronic devices, which are overtaken by a better version every year? In other words, does statistics have a planned obsolescence (milk's expiration date, at least, is set in good faith), a technological obsolescence, or a natural aging process that little can be done to manage?

First of all, in my experience reports with a fixed or scheduled deadline have a certain importance, and that is one of the reasons why my consultancy service usually also includes a follow-up: a subsequent check-up visit, a bit like at the dentist. The follow-up shows whether a variable that was significant on December 5th is still significant five months later or more.

This phenomenon of significance that can fade is part of a more general phenomenon called data drift.

This is a term that comes from the field of machine learning engineering, which is a very specific branch of statistics. Data drift happens when, for example, the distribution of input data changes as a function of time.

Drift also shows up when you train models that are too rigid, and I don't mean only so-called overfitting, i.e. models that are too specific to the data they were trained on. Even in that case, though, you can have a model that works very well on the test dataset and, after just 30 days of new data, starts to give you serious problems.

These overfitted or overly specific models usually also come up when you ask for a model evaluated on only one performance metric. But that is another matter.

There are four types of drift. The most complicated, obviously, has to do with sudden change: some kind of extreme event linked either to your target variable or to some of your explanatory variables.

In episodes of the podcast (in Italian), I gave various examples of sudden changes. This situation has an unsurprising name, sudden drift.

For example, suppose I built a model to forecast the turnover of your bar, and at a certain point the municipality awards the contract for roadworks on the street where your bar is. The works remove parking spaces and make visiting your business less convenient for a portion of your customers.

Second case: gradual drift. If you sold fax machines, you saw people equip themselves with computers over time, also because they became ever cheaper, then with an internet connection and consequently with an email service, so email gradually replaced faxes.

Alternatively, people’s preferences change: once it was fashionable to play a game of soccer with friends or colleagues on the weekend, so soccer fields had a regular clientele of a certain type; now padel seems to attract many more people than soccer.

Third drift: incremental drift, which in some cases is confused with the previous one. Example: spam techniques change. The first dangerous emails had a romantic theme; you will probably remember the famous case of the email with the subject “I love you”, which was clearly better known overseas than in Italy. Over time the technique was refined and we got emails from a Nigerian prince asking for help in exchange for a reward. In recent years, many spam emails have been about the arrival of a parcel from a courier.

Last drift: recurring drift. If you like a certain Russian author, you will also like this type of drift, because it has to do with autocorrelation, and therefore with the cyclicality of events. For example, ice cream sellers generally sell when it’s hot, not when it’s cold (at least in Italy; abroad I discovered with some horror that it works differently). A fanciful hypothesis: ice cream makers sell more in the years of the climatic phenomenon called El Niño, that is, in the hottest years. Obviously there is also a limit beyond which the heat no longer increases your sales.
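To make the link between recurring drift and autocorrelation concrete, here is a minimal sketch in Python. The monthly sales figures are invented purely for illustration (a smooth yearly cycle over four years), and `autocorrelation` is a hypothetical helper, not a function from any library. A strongly positive value at a lag of 12 months, together with a negative value at 6 months, is the kind of signature a yearly cycle leaves.

```python
import math
from statistics import mean

def autocorrelation(series, lag):
    """Sample autocorrelation of a series at the given lag."""
    mu = mean(series)
    # Covariance between the series and a copy of itself shifted by `lag`.
    num = sum((series[t] - mu) * (series[t + lag] - mu)
              for t in range(len(series) - lag))
    # Total variation of the series around its mean.
    den = sum((x - mu) ** 2 for x in series)
    return num / den

# Invented data: 48 months of ice cream sales with a yearly cycle.
sales = [100 + 50 * math.sin(2 * math.pi * month / 12) for month in range(48)]

for lag in (6, 12):
    print(lag, round(autocorrelation(sales, lag), 3))
```

With real sales the pattern is noisier, but a peak at the seasonal lag still suggests that the change is a recurrence to model rather than a new regime.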

These drifts obviously need to be monitored. How? With automation, usually. And just as automation reduces certain types of employees but increases the number of supervisors, the same thing happens with machine learning, or in this case machine learning engineering: we move from the person who knows how to build models, whether with an IT or a statistical approach, to so-called Machine Learning Operations (MLOps). Something similar exists in software development, where it is called DevOps (Development Operations); there, essentially, we have supervisors and/or people who coordinate developers.

At this point you may be wondering how to keep data drift in check. If you have watched any of my videos, you will already have seen one method: sequential analysis, which lets you, for example, keep an eye on the significance of a variable as the number of observations taken into consideration varies, or as time passes. Another method is a check, that is, a statistical test of whether two portions of the data come from the same probability distribution. Then we obviously have other statistical tests, more or less old, as well as synthetic indicators and statistical quality control methods.
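As an illustration of a test that checks whether two portions of data come from the same distribution, here is a minimal sketch of the two-sample Kolmogorov-Smirnov test in plain Python. The choice of this particular test is my assumption for the example (the text above does not name one), the data are simulated, and `ks_statistic` and `drift_detected` are hypothetical helper names. The decision uses the standard large-sample critical value, so it is only approximate for small samples.

```python
import math
import random

def ks_statistic(a, b):
    """Largest gap between the empirical CDFs of two samples."""
    a, b = sorted(a), sorted(b)
    n, m = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        # Advance both samples past x, then compare the two CDFs at x.
        while i < n and a[i] <= x:
            i += 1
        while j < m and b[j] <= x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d

def drift_detected(reference, current, alpha=0.05):
    """Return (flag, D, critical value) using the asymptotic KS threshold."""
    n, m = len(reference), len(current)
    d = ks_statistic(reference, current)
    critical = math.sqrt(-math.log(alpha / 2) / 2) * math.sqrt((n + m) / (n * m))
    return d > critical, d, critical

# Simulated example: the input variable shifts its mean from 0 to 1.
rng = random.Random(42)
reference = [rng.gauss(0.0, 1.0) for _ in range(500)]
shifted = [rng.gauss(1.0, 1.0) for _ in range(500)]
flag, d, critical = drift_detected(reference, shifted)
print(flag, round(d, 3), round(critical, 3))
```

In practice the reference sample would be the data the model was trained on and the current sample a recent window of production data, with one test per monitored variable.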

As you may have understood, we are in engineering territory, so I’ll give you an example from industrial chemistry: a distillation column (in a refinery), which in this case represents our model. The column obviously has a lot of controls: temperature and pressure, and at the inlet the quality of the raw material, the volumetric flow rate of the crude oil, etc. Based on these controls we also have all the necessary automations: valves that close or open, possible vents, etc. In our case, the statistical tests and the rest metaphorically represent the controls of the distillation column. The valves and vents correspond to stopping the model and retraining it with appropriate precautions.

Re-training can also be automated based on how the control metrics are performing.
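A minimal sketch of such a trigger, in the spirit of statistical quality control: an upper control limit is computed from the model's error during a period when it was known to behave well, and retraining is flagged when recent errors cross that limit too often. The function names, the three-sigma limit, the two-violation rule and the numbers are all assumptions made for illustration.

```python
from statistics import mean, stdev

def upper_control_limit(baseline_errors, k=3.0):
    """Mean plus k standard deviations of the error in a stable period."""
    return mean(baseline_errors) + k * stdev(baseline_errors)

def should_retrain(baseline_errors, recent_errors, k=3.0, max_violations=2):
    """Flag retraining when enough recent errors exceed the control limit."""
    limit = upper_control_limit(baseline_errors, k)
    violations = sum(1 for e in recent_errors if e > limit)
    return violations >= max_violations

# Invented daily forecast errors (e.g. mean absolute error per day).
baseline = [10, 11, 9, 10, 12, 10, 9, 11]
print(should_retrain(baseline, [10, 12, 11]))  # errors within the usual range
print(should_retrain(baseline, [14, 15, 11]))  # two days above the limit
```

Requiring more than one violation is a design choice that trades reaction speed for fewer false alarms; the right balance depends on how costly a bad forecast is compared with an unnecessary retraining.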

A model expires like milk when there was no pasteurization beforehand, that is, no process that would have led to a longer-lived model. In economics we have a striking example, even if at the time they did not have the computing power of today. Then there are models that age simply because science advances over time and we get models that perform better from various points of view, or, upstream of the models, better tools, or, downstream of them, more effective and efficient diagnostics, and so on.

But obviously, in certain cases, models age naturally because their target variable really does change over time.

If you would like to have a model for an important business metric with more limited obsolescence, you can contact me.